Nowadays, technology is increasingly present across many domains, performing complex tasks such as pattern recognition and data analysis. Healthcare is one of these domains, and it is benefiting from such high-tech concepts to support human professionals. For instance, AI can help doctors diagnose certain diseases, such as cancer. Machine Learning, which allows computers to learn from data, derive prediction models, and thus predict the likelihood of certain patterns (re)occurring, is at the very centre of this revolution.

Although AI and Machine Learning have the potential to support many domains, in some of them we need to take precautions and set specific limits on how they are used. For instance, Machine Learning in healthcare deals with sensitive personal data, which raises privacy issues: privacy must be guaranteed at all times. AI for healthcare must therefore guarantee specific properties to be applicable in healthcare solutions, and privacy protection is only one of them.

FAITH pledges to handle users’ sensitive information with the utmost care. This responsibility is the cornerstone of how we design and implement our technology, and it is reflected across multiple dimensions of FAITH, for example in our efforts to gain user trust and acceptance and to foster usability. Central to our privacy-conscious technology development is the application of Federated Learning (FL) principles in the design of the platform architecture. This can be a game-changing approach to data collection and AI modelling. In FAITH, as in many applications in healthcare and other domains such as entertainment and online retail, a model’s most important source of information is the data it has about the user, which is exactly the data that must be protected.

Decentralising data management to enhance privacy – FAITH Federated Learning

The usual practice is to collect user data from various sources and use it to train a single, centrally located AI model, improving its accuracy and efficacy. Federated Learning, by contrast, gives each user their own version of the AI model. While the basis for training the model is the same for everyone, each user’s local model ends up with distinct parameter values that reflect their personal data. This is the very essence of Federated Learning.
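To make this concrete, the following minimal Python sketch starts two clients from the same global parameters and trains each one locally on its own data. The linear model `y = w * x + b`, the learning rate, and the two datasets are illustrative assumptions, not FAITH’s actual model; the point is only that identical starting weights end up as distinct, personalised parameter values.

```python
def local_train(weights, data, lr=0.05, epochs=1000):
    """Fit y = w * x + b to one client's (x, y) pairs with plain gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Mean-squared-error gradients computed from this client's data only.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


# Same starting point (the shared global model) for every client.
global_weights = (0.0, 0.0)

# Hypothetical private datasets for two users.
alice_data = [(x, 2.0 * x + 1.0) for x in range(5)]
bob_data = [(x, -1.0 * x + 3.0) for x in range(5)]

alice_model = local_train(global_weights, alice_data)
bob_model = local_train(global_weights, bob_data)
print("alice:", alice_model)
print("bob:  ", bob_model)
```

Alice’s parameters approach `(2, 1)` and Bob’s approach `(-1, 3)`: the same model structure, personalised by each user’s data.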

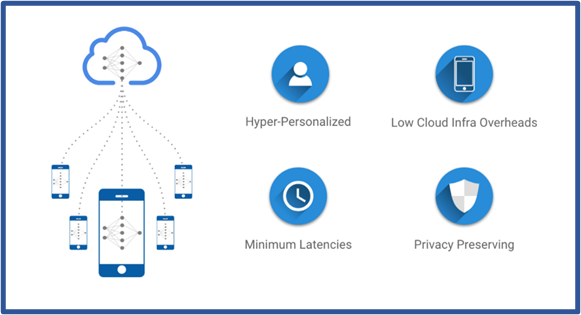

In the FL ecosystem of FAITH, each deployment of the FAITH Mobile App on a participant’s smartphone includes a client, which holds its own version of the AI model. This version of the model is trained locally on the device, using the data collected from that user. FL-enabled libraries then send only the resulting model updates back to the central repository; no personal user data is transferred. These updates are aggregated and applied to the central AI model to refine and improve it. The improved model is then distributed back to the individual clients, and the training cycle repeats.
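The cycle described above can be sketched in plain Python. This is a toy simulation under stated assumptions: `Client`, `local_train` and `run_rounds` are hypothetical names, the model is a simple linear regression, and the server combines updates with an unweighted mean (federated averaging usually weights each client by its amount of data). What it does show is that each client returns only a parameter delta, while its data never leaves the client object.

```python
# Toy simulation of the FL training cycle (all names are hypothetical).

def local_train(weights, data, lr=0.05, epochs=20):
    """A few epochs of gradient descent on y = w * x + b, using local data only."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


class Client:
    def __init__(self, data):
        self._data = data  # stays on the "device"; never returned to the server

    def compute_update(self, global_weights):
        local_weights = local_train(global_weights, self._data)
        # Share only the parameter change, not the data it was computed from.
        return tuple(new - old for new, old in zip(local_weights, global_weights))


def run_rounds(clients, global_weights, rounds):
    for _ in range(rounds):
        # 1. Distribute the current global model; 2. each client trains locally.
        updates = [client.compute_update(global_weights) for client in clients]
        # 3. The server aggregates the updates (unweighted mean in this sketch).
        mean_update = [sum(u[i] for u in updates) / len(updates)
                       for i in range(len(global_weights))]
        # 4. Apply the aggregate; the refined model goes out in the next round.
        global_weights = tuple(g + d for g, d in zip(global_weights, mean_update))
    return global_weights


# Three hypothetical clients whose private data all follow y = 3x - 2.
clients = [Client([(x, 3.0 * x - 2.0) for x in xs])
           for xs in ([0, 1, 2], [1, 2, 3, 4], [2, 3])]
final_weights = run_rounds(clients, global_weights=(0.0, 0.0), rounds=50)
print(final_weights)
```

Because every hypothetical client’s data follows the same relationship here, the global model converges towards `w = 3`, `b = -2`. With real, heterogeneous user data the clients would pull in different directions, and aggregation would settle on a compromise between them.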

Federated Machine Learning Structure and Benefits

Federated Learning benefits for privacy and personalised AI

The development of our FL prototype has been a methodical process of careful planning, rigorous testing, and step-by-step implementation.

In the initial stages, we developed a rudimentary proof of concept that was simulated entirely in code and fed with datasets from the literature. The purpose of this stage was to confirm the feasibility of an architecture based on FL principles and to gain a foundational understanding of its intricacies, without the complexity of real-world data and multiple clients. We aimed to verify FL’s core proposition, namely its ability to offer enhanced privacy protection.
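Code-only FL simulations commonly emulate several clients inside one process by splitting a centralised dataset into per-client shards. The sketch below assumes samples are `(features, label)` tuples and offers two splitting modes in a hypothetical `partition` function: a shuffled (IID) split and a label-sorted (non-IID) split, which mimics users whose data differ systematically. The splitting strategy is an assumption for illustration, not a description of how our proof of concept divided its datasets.

```python
import random


def partition(samples, num_clients, iid=True, seed=0):
    """Split a centralised dataset into per-client shards for simulation.

    iid=True shuffles before splitting, so every client sees a random mix.
    iid=False sorts by label first, so clients hold skewed (non-IID) data.
    """
    if num_clients < 1:
        raise ValueError("num_clients must be at least 1")
    samples = list(samples)  # copy: never reorder the caller's list
    if iid:
        random.Random(seed).shuffle(samples)
    else:
        samples.sort(key=lambda sample: sample[1])
    # Contiguous shards whose sizes differ by at most one sample.
    base, extra = divmod(len(samples), num_clients)
    shards, start = [], 0
    for i in range(num_clients):
        size = base + (1 if i < extra else 0)
        shards.append(samples[start:start + size])
        start += size
    return shards


# Hypothetical toy dataset: (feature, label) pairs with two classes.
dataset = [(float(i), i % 2) for i in range(100)]
for mode in (True, False):
    shards = partition(dataset, num_clients=4, iid=mode)
    label_one_counts = [sum(label for _, label in shard) for shard in shards]
    print("iid" if mode else "non-iid", [len(s) for s in shards], label_one_counts)
```

In the non-IID mode, some simulated clients see only one class, which is a quick way to test how aggregation copes with users whose data look very different from each other.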

As we gained confidence in the concept, our attention turned to building the foundational components of Federated Learning. We focused on implementing federated averaging, a pivotal algorithm in FL. In this decentralised approach, the AI models on each device learn and update independently and send their model updates to a central repository, where the updates are averaged into an improved shared model. This was a significant milestone in our FL journey, as it meant we could preserve user privacy while ensuring that individual models still benefit from collective learning.
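In its usual formulation, federated averaging weights each client’s parameters by its share of the training data: with $n_k$ samples on client $k$ and $n = \sum_k n_k$, the new global parameters are $w = \sum_k \frac{n_k}{n} w^{k}$. A minimal, dependency-free sketch of this aggregation step follows; parameters are plain lists of floats, and the function name `federated_average` is hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Weighted average of client parameter vectors (FedAvg aggregation step).

    client_weights: one equal-length list of floats per client.
    client_sizes: number of local training samples n_k for each client.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    num_params = len(client_weights[0])
    averaged = [0.0] * num_params
    for weights, n_k in zip(client_weights, client_sizes):
        if len(weights) != num_params:
            raise ValueError("all clients must share the same model shape")
        # Each client contributes in proportion to its share of the data.
        for i, w in enumerate(weights):
            averaged[i] += (n_k / total) * w
    return averaged


# Hypothetical round: three clients with different amounts of local data.
new_global = federated_average(
    client_weights=[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]],
    client_sizes=[10, 30, 60],
)
print(new_global)
```

Here the client holding 60 of the 100 samples contributes 60% of each averaged parameter, giving approximately `[4.0, 5.0]`. Weighting by sample count keeps clients with very little data from pulling the shared model as strongly as clients with a lot.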

We then moved on to integrating more sophisticated functionality, such as model serving and communication protocols. We selected gRPC, a high-performance, open-source remote procedure call (RPC) framework originally developed by Google, as our protocol for communication between the server and the clients. This enabled efficient, robust, and secure transmission of model updates.
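Whatever the transport, what travels between client and server is a serialised set of model parameters, not user data. The sketch below shows one hypothetical way to encode a model update as bytes with Python’s standard `struct` module: a small header (round number and parameter count) followed by little-endian 64-bit floats. In a gRPC service, such bytes could be carried in a `bytes` field of a Protocol Buffers message, or the parameters could be declared directly as a `repeated double` field; that message definition and the layout below are assumptions for illustration.

```python
import struct

# Hypothetical wire layout for one model update (all fields little-endian):
#   uint32 round number | uint32 parameter count | float64 parameters...
HEADER = struct.Struct("<II")


def encode_update(round_id, params):
    """Pack a round number and a list of float parameters into bytes."""
    header = HEADER.pack(round_id, len(params))
    body = struct.pack(f"<{len(params)}d", *params)
    return header + body


def decode_update(payload):
    """Inverse of encode_update; rejects payloads of the wrong length."""
    round_id, count = HEADER.unpack_from(payload, 0)
    expected = HEADER.size + 8 * count
    if len(payload) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(payload)}")
    params = list(struct.unpack_from(f"<{count}d", payload, HEADER.size))
    return round_id, params


payload = encode_update(7, [0.5, -1.25, 3.0])
print(len(payload), decode_update(payload))
```

Declaring the parameters directly in a `.proto` schema lets Protocol Buffers handle the encoding; a hand-rolled layout like this one mainly makes explicit what is, and is not, sent. The security of the channel itself comes from gRPC’s support for TLS rather than from the encoding.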

Further developments of FAITH’s Federated Learning

Currently, our focus is on a more ambitious goal: deploying the fully featured FL model on OpenStack, a powerful open-source software platform for cloud computing. This allows us to work with multiple clients and scale our operations while preserving the benefits of Federated Learning.

We want to contribute to advancing this pioneering field of Machine Learning: we believe in a vision where AI and Machine Learning progress without compromising user privacy.

Navigating the challenges of Federated Learning, a rapidly evolving field whose tools and concepts are developing swiftly, has been an enriching journey. FAITH is at the forefront of applying Federated Learning in healthcare, adapting and evolving with this changing ecosystem.

Authors: Gary McManus, Christine O’Meara, Philip O’Brien (Walton Institute), Stefanos Venios (Suite5).